The Creative Brief

Use a hybrid AI/human workflow to make a visual trailer for a podcast.

Last year I was commissioned to make a visual trailer to promote a client’s podcast, a sci-fi series about Seattle one hundred years from now.

The materials supplied to me were snippets from the podcast and a script for the trailer.

It was up to me, as the sole creative, to devise a human/AI workflow and make the 90-second trailer as I saw fit.

Tools used: ChatGPT, Midjourney, the Stable Diffusion plugin for Photoshop, Gravity Sketch, After Effects, ink & paper, Photoshop.

Speeding up every step of the process using AI

A typical workflow to make a visual trailer looks something like:

- Write the script

- Create storyboards

- Design style frames

- Design all the visual assets

- Animate everything

AI helped speed up each step of the above process.

Script

I tightened the script by repeatedly asking ChatGPT to rewrite drafts in the style of a movie trailer. I first iterated on the full 90-second script, then refined each line myself, using my own sensibility and understanding of how the phrases needed to cohere.

ChatGPT was remarkably good at paring a long script down and reshaping it into an established style (the movie trailer). What would usually take a couple of hours took thirty minutes.

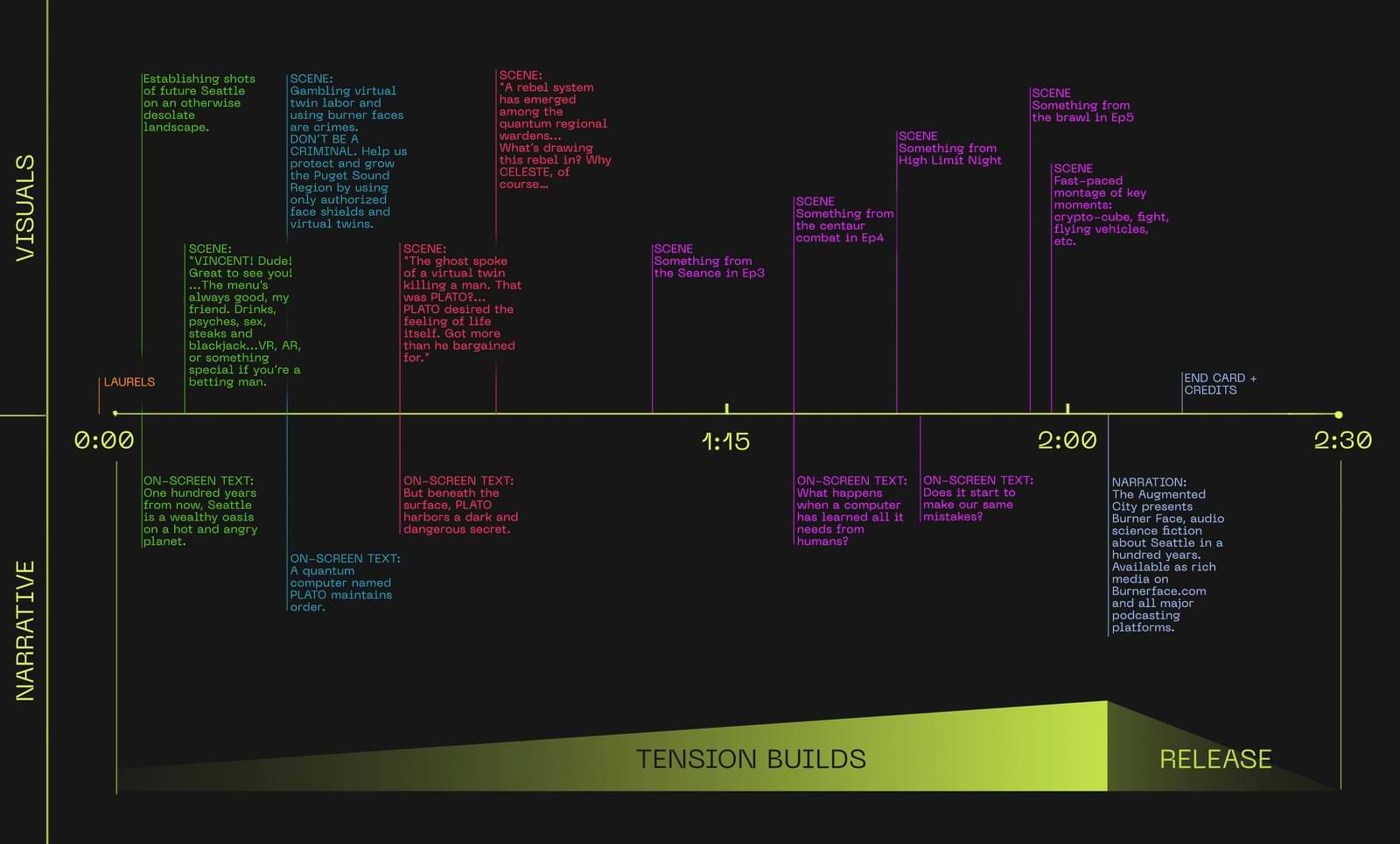

I then built a rough timeline of which visuals should correspond to each beat of the trailer.

Storyboards

I’m more interested in designing characters than I am in environments, so this project was the perfect experiment in figuring out workflows that augmented my (human) skills and interests with AI.

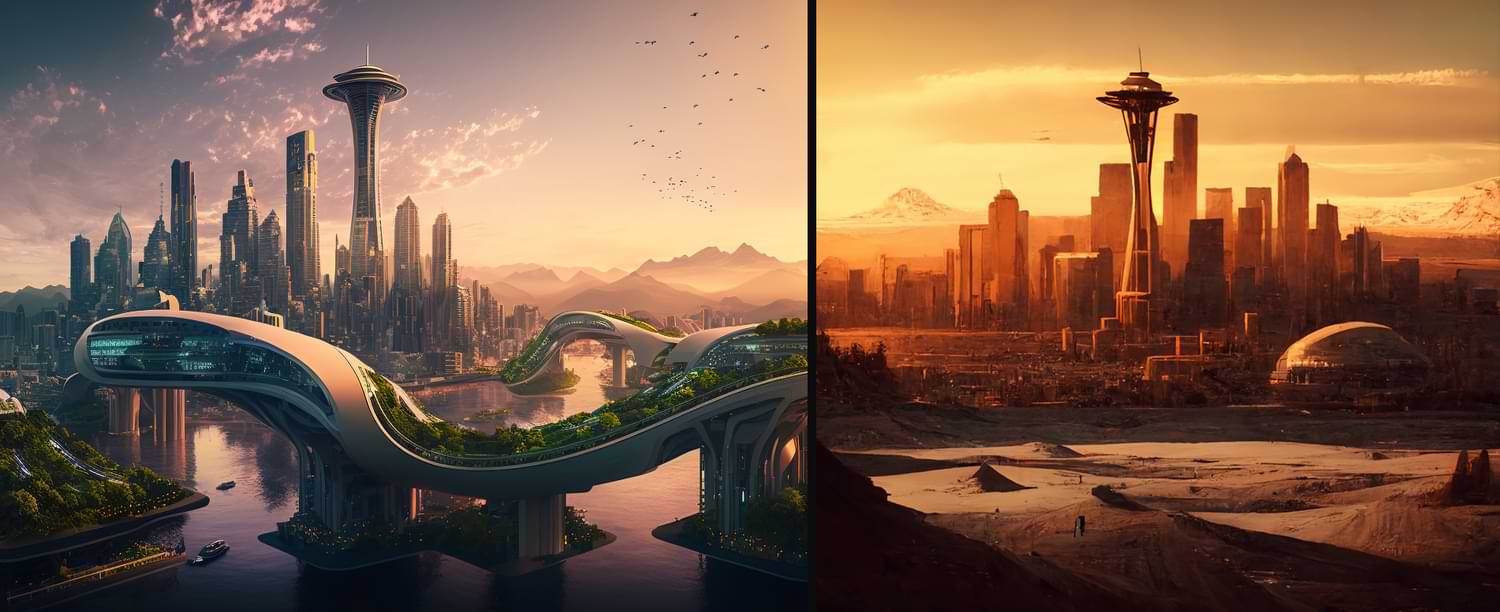

I used Midjourney to rapidly flesh out every visual beat of the trailer and to settle core visual directions: Is future Seattle arid? Wet? What might a futuristic flying car look like?

Dozens of visual questions, from the obvious to the subtle, can now be front-loaded by generating ideas in Midjourney.

What’s great is that I can show my client all of these visual ideas in real time. There’s no fear of showing them a rough sketch or something half-baked and risking that they won’t “get” it.

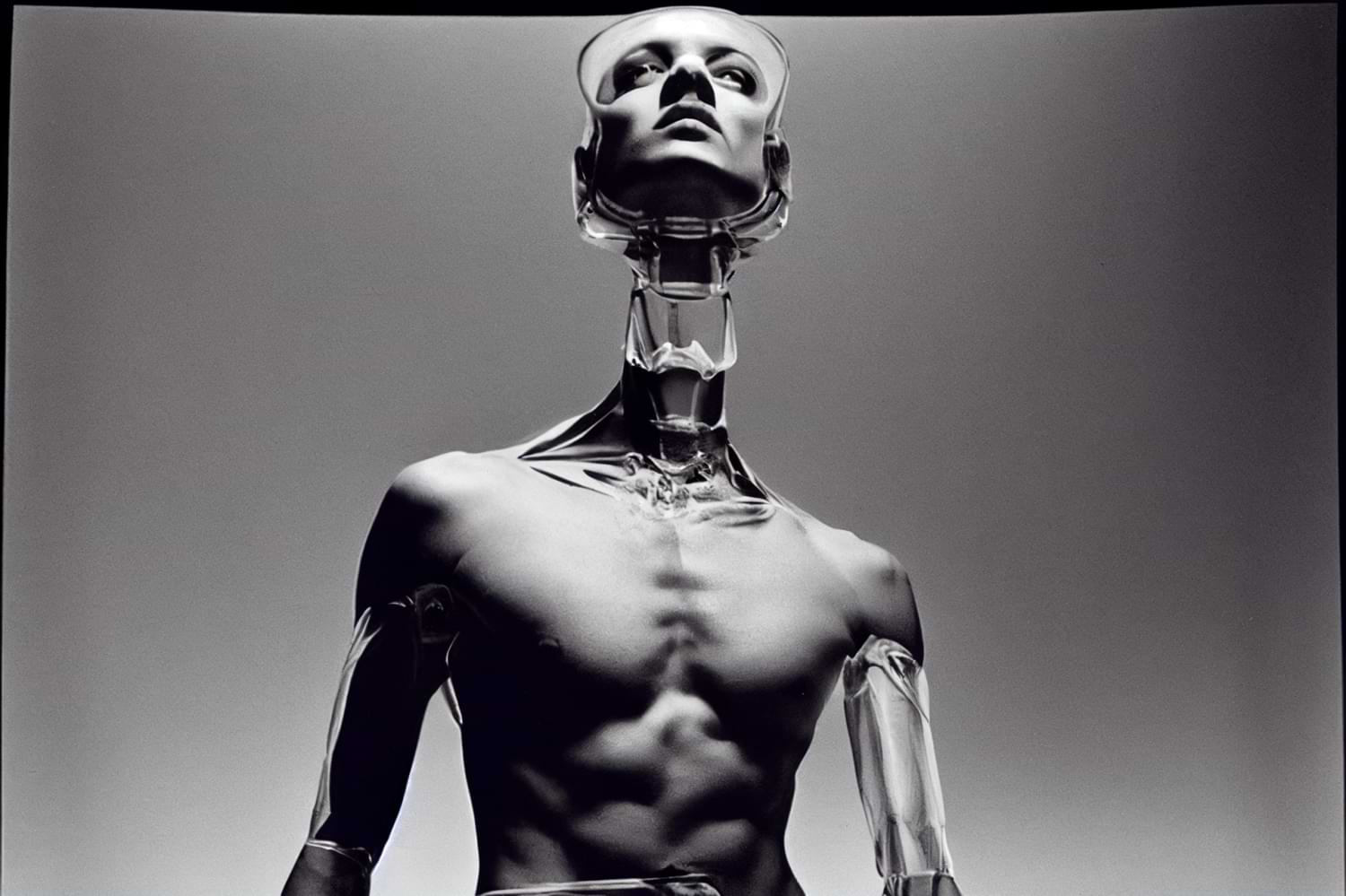

I next blocked in where I wanted the characters to appear in the trailer, and started iterating on what the characters might be wearing in the distant future.

Here, again, Midjourney proved valuable for knocking out a variety of ideas. I also threw in some Helmut Newton photos, which I love for their sexy, alien, strong aesthetic, and mixed them with futuristic wearable tech and body mods, with very interesting results.

Visual Asset Designs

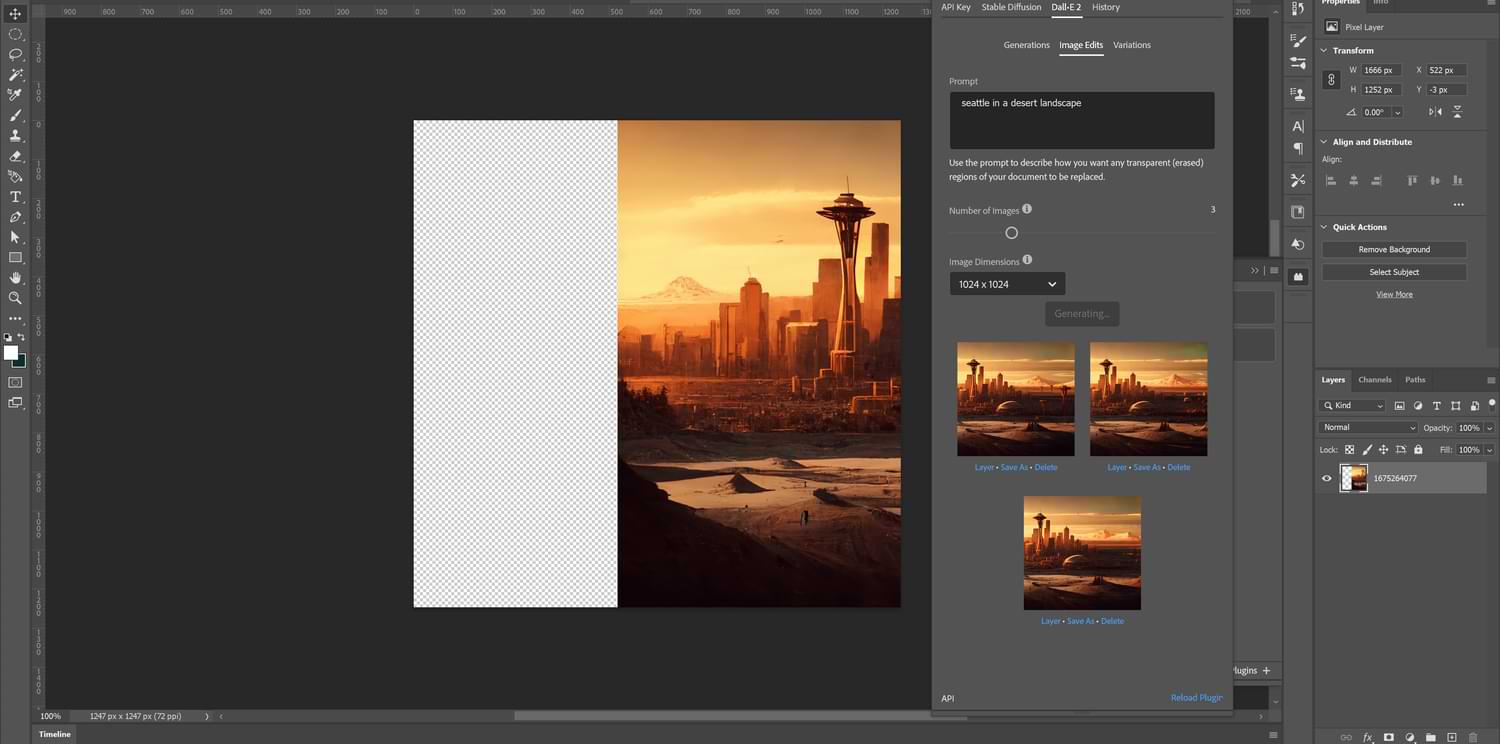

The backgrounds and environments all came out of Midjourney. I refined some of the cars on the roads and some background buildings using the Stable Diffusion plugin for Photoshop, but the bulk of the visuals were generated directly in Midjourney.

I then cut some of the landscapes into separate layers to give everything depth as the camera tracked in and out of scenes.

I spent the bulk of my time designing and building out the characters, working across ink and paper, Photoshop, and After Effects.

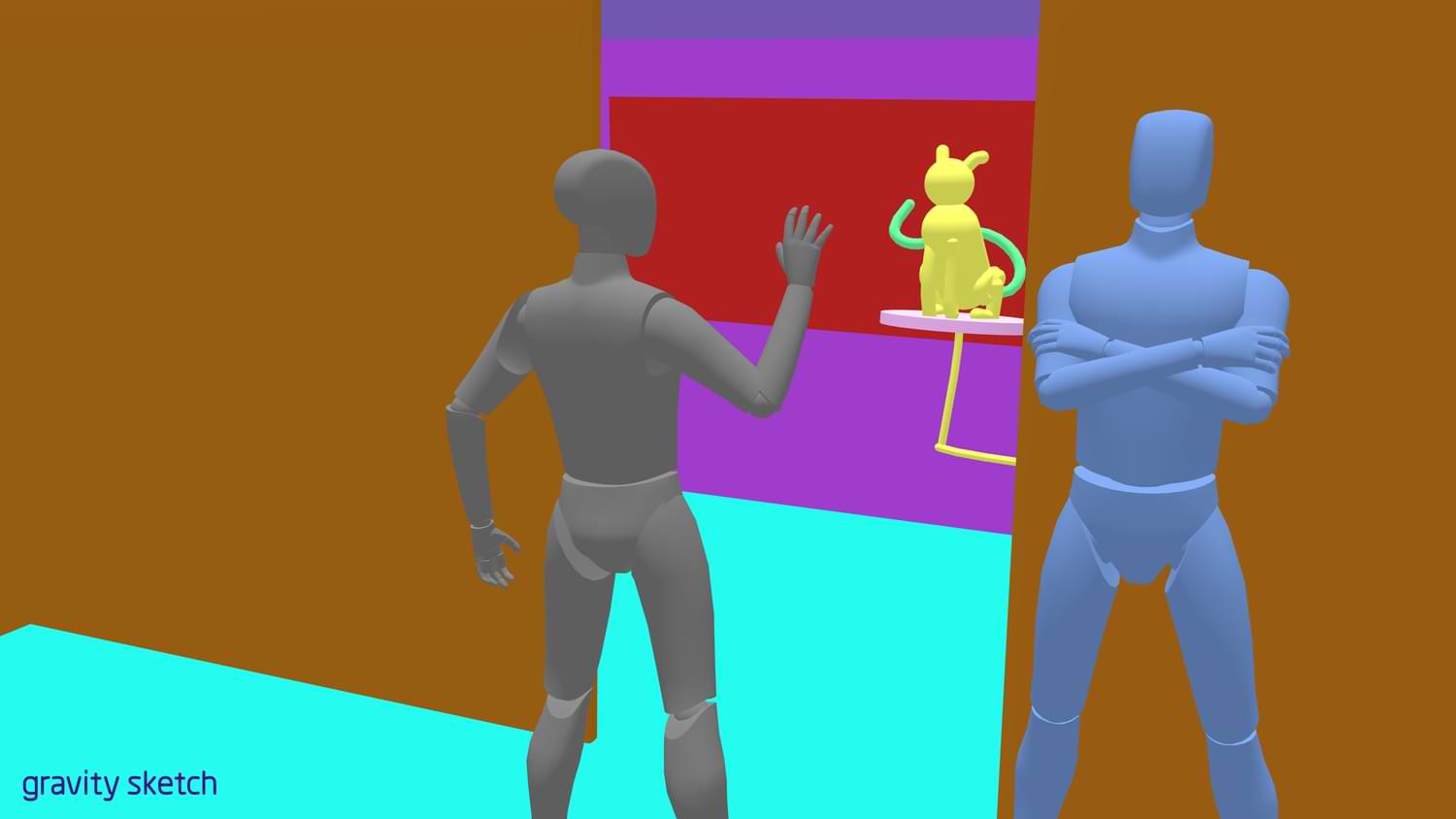

I also designed the characters with the perspective of the AI-generated backgrounds in mind, using Gravity Sketch to quickly capture the correct perspective and pose each character to match.

Animation

All the backgrounds and characters were then brought together for animation. AI-generated visuals inevitably vary somewhat in style, so I built in several ways to keep the end result cohesive.

One way was through color correction and texture: a filmic, vibrant color palette runs throughout the piece. Another was through character design. Because I designed the characters by hand, I could make sure they all belonged to the same world, which kept everything consistent even though the AI-generated backgrounds varied considerably in style. The last was through animation: the camera tracking forward through the scenes and text kept everything feeling as if we were moving through one universe.

Conclusion

Actual production on the piece probably took less than a week. The level of visual richness I could achieve working alone was eye-opening.

The benefits were obvious: I could quickly generate, iterate on, and modify any visuals I wanted, and I could share them with the client and get approval almost in real time. A client can now pay one creative to do work that would traditionally take an entire team of artists.

The downside was subtle: although I could generate any number of visuals, typing and retyping prompts all day felt creatively empty.

All in all, I enjoyed this experiment in figuring out what an AI/human workflow could look like; it is one step into a new, AI-fueled world.